Google and Stanford Researchers Used ChatGPT To Invent a Small Virtual Town

Anyone who used to play The Sims can tell you: That game can get horribly chaotic. When left to their own devices, your Sims are liable to do anything from peeing themselves, to starving, to accidentally setting themselves (and their children) on fire.

This level of “free will,” as the video game calls it, offers an interesting if somewhat unrealistic simulation of what someone might do if you just left them alone and watched from afar like an omniscient and uncaring god. As it turns out, that’s also the inspiration behind a new study that simulates human behavior using ChatGPT.

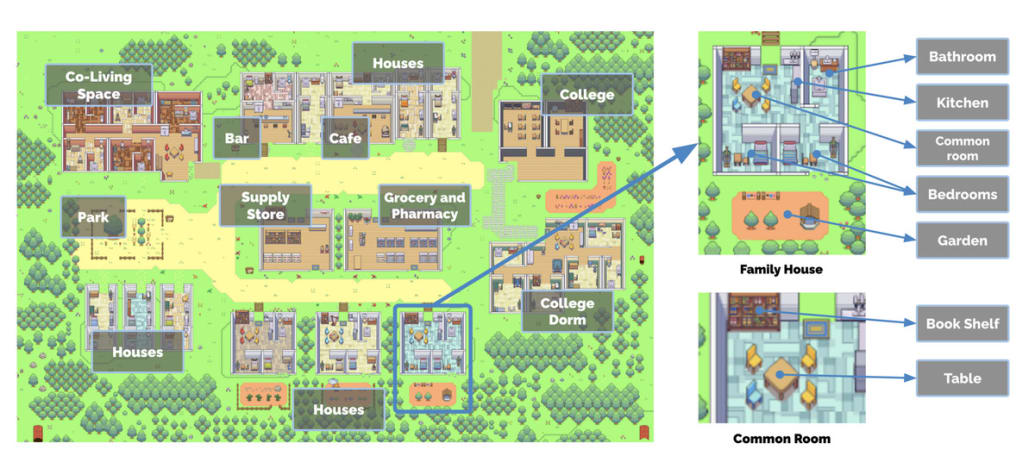

A group of AI researchers at Google and Stanford University posted a study online on April 7 in which they used OpenAI’s chatbot to create 25 “generative agents,” or unique personas with identities and goals, and placed them in a sandbox environment resembling a town called Smallville, much like The Sims. The study’s authors (whose work has not been peer-reviewed) observed the agents as they went about their day, went to work, talked to each other, and even planned activities.

The bots and their virtual environment are rendered as charming 16-bit sprites, giving the simulation the look and feel of a video game. The result is a rather idyllic village that seems to have been ripped out of Harvest Moon or Animal Crossing, if, you know, those games were also filled with extremely complicated and uncomfortable moral and existential questions.

Smallville’s world is built entirely on a large language model.

Screenshots/Handouts

“Agents wake up, cook breakfast and go to work; the artist draws, while the author writes; they form opinions, notice each other, and initiate conversations; they remember and reflect on days gone by as they plan for the next day,” the authors write.

To create the agents, the team gave ChatGPT a one-shot prompt for each character that included the character’s memories, goals, relationships, and job. As the characters move through their virtual world, they can perceive their surroundings and make decisions based on their memories, the setting, and how other characters interact with them.

For example, there is the character John Lin. He is a pharmacy owner who lives with his wife, Mei, a university professor, and his son, Eddy, who is studying music theory at school. The authors observed John go about his day based on this initial prompt, waking up promptly at 7 a.m., then brushing his teeth, showering, and eating breakfast before watching the news. He then greeted his son Eddy and his wife Mei before going to work at the pharmacy. Just another day in paradise for the Lin family.
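To make the idea of a one-shot character prompt concrete, here is a minimal sketch in Python. The article does not give the study's actual prompt format, so the `Persona` class, its field names, and the sentence templates are all hypothetical; only the John Lin details come from the description above.

```python
from dataclasses import dataclass, field


@dataclass
class Persona:
    """Hypothetical container for the details the prompt is said to include."""
    name: str
    job: str
    goals: list[str] = field(default_factory=list)
    relationships: dict[str, str] = field(default_factory=dict)
    memories: list[str] = field(default_factory=list)


def build_seed_prompt(p: Persona) -> str:
    """Render a persona as one natural-language description (assumed format)."""
    lines = [f"{p.name} is a {p.job}."]
    for other, relation in p.relationships.items():
        lines.append(f"{p.name}'s {relation} is {other}.")
    for goal in p.goals:
        lines.append(f"{p.name} wants to {goal}.")
    for memory in p.memories:
        lines.append(f"{p.name} remembers that {memory}.")
    return " ".join(lines)


# Details taken from the article's description of John Lin.
john = Persona(
    name="John Lin",
    job="pharmacy owner",
    relationships={"Mei": "wife", "Eddy": "son"},
    memories=["Eddy is studying music theory at school"],
)
seed_prompt = build_seed_prompt(john)
print(seed_prompt)
```

In a real system the resulting text would be sent to the language model as the character's starting description; that call is left out here because the article does not describe it.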

However, things got really interesting when the researchers began to witness emergent behavior, or the unintended and unexpected side effects of an AI system. This included instances where characters shared new information with one another, which was then passed on to other agents.

The citizens of Smallville interact with each other and their environment at a local diner.

Screenshots/Handouts

For example, a character named Sam Moore told another named Tom Moreno that he wanted to run for mayor of Smallville. Tom later talked to John, who had also heard the news about Sam’s political aspirations.

From the study:

John: I heard that Sam Moore is running for mayor in the local election. Do you think he has a good chance to win?

Tom: I think he has a good chance. He has worked hard in the community and I think he will get a lot of support. What do you think?

John: I think it’s great that he’s running. I’m curious to see who else is running and how the election goes.

Interestingly, the interactions seem to go beyond simple conversations and into the fascinating realm of classic gossip. At one point, Tom confided in a character named Isabella Rodriguez that not only did he think Sam was “out of touch with the community” and did not have “their best interests” at heart, but that he also didn’t like Sam. It’s the kind of messy drama that could turn something like this into a long-running, popular Real Housewives-style reality TV show on Twitch.

Another emergent behavior was relational memory, in which agents were observed forming new relationships with one another. For example, Sam ran into Latoya Williams at the park and introduced himself. Latoya mentioned that she was working on a photography project, and Sam later asked her how the project was going when they met again.

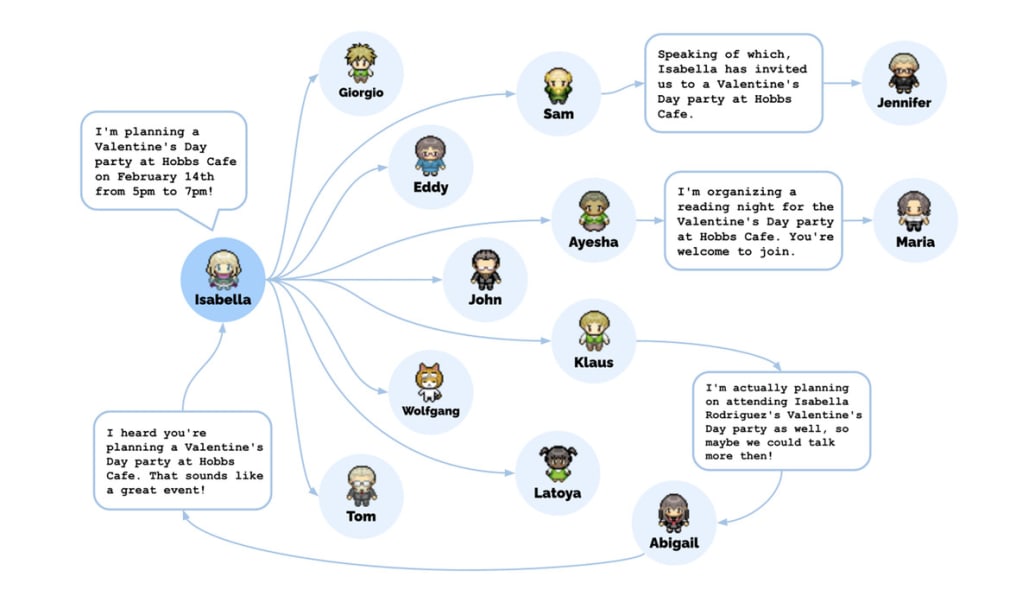

The people of Smallville also seem to have the ability to coordinate and organize with one another. Isabella’s initial prompt included that she wanted to throw a Valentine’s Day party. Once the simulation began, her character started inviting Smallvillians to the party. Word spread about the event, characters began asking each other out on dates to the party, and they all showed up without having been prompted to do so.

At the heart of the research is a ChatGPT-connected memory access architecture that allows agents to interact with each other and with the virtual world around them.

Screenshots/Handouts

The authors wrote: “Agents are aware of their environment, and all perceptions are stored in a comprehensive record of the agent’s experience known as a memory stream. Based on their perception, the architecture retrieves the associated memories, then uses those retrieved actions to identify an action.”
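The perceive, store, retrieve, and act loop the authors describe can be sketched roughly as follows. This is only an illustration under stated assumptions: the article does not say how the study ranks memories, so the word-overlap-plus-recency ranking below is a crude stand-in, and the class names, method names, and prompt wording are all hypothetical. The call to the language model itself is omitted.

```python
from dataclasses import dataclass


@dataclass
class Memory:
    step: int   # simulation step at which the perception was recorded
    text: str   # natural-language description of what the agent perceived


class MemoryStream:
    """Append-only record of an agent's perceptions, with naive retrieval."""

    def __init__(self) -> None:
        self.records: list[Memory] = []

    def perceive(self, step: int, text: str) -> None:
        self.records.append(Memory(step, text))

    def retrieve(self, situation: str, k: int = 2) -> list[Memory]:
        """Return up to k memories sharing words with the situation.

        Assumed ranking: more shared words first, then more recent first.
        Memories with no shared words are skipped.
        """
        query = set(situation.lower().split())
        scored = []
        for m in self.records:
            overlap = len(query & set(m.text.lower().split()))
            if overlap > 0:
                scored.append((overlap, m.step, m))
        scored.sort(key=lambda item: (item[0], item[1]), reverse=True)
        return [m for _, _, m in scored[:k]]


def build_action_prompt(agent: str, situation: str, stream: MemoryStream) -> str:
    """Assemble the text that would be sent to the language model."""
    recalled = "\n".join(f"- {m.text}" for m in stream.retrieve(situation))
    return (
        f"{agent} is in this situation: {situation}\n"
        f"Relevant memories:\n{recalled}\n"
        f"What does {agent} do next?"
    )


stream = MemoryStream()
stream.perceive(1, "Sam Moore said he wants to run for mayor")
stream.perceive(2, "The diner is serving breakfast")
stream.perceive(3, "Tom Moreno thinks Sam Moore has a good chance")
prompt = build_action_prompt("John Lin", "John meets Sam Moore at the park", stream)
print(prompt)
```

With these three perceptions, the two memories mentioning Sam Moore are recalled and the unrelated diner memory is left out, which is the basic effect retrieval is meant to have: the model only sees the part of the agent's history that bears on the current moment.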

All in all, nothing nefarious or terrible happened, like a house burning down because a character was too busy playing video games to notice the fire in the kitchen, as in The Sims. Even so, Smallville is a seemingly idyllic community that hides some big, hairy, consequential moral problems beneath its 16-bit shell.

The study’s authors noted a number of social and ethical considerations with their research. For one, there is a risk that people develop unhealthy parasocial relationships with artificially generated agents like those in Smallville. While this may seem silly (who would form a relationship with a video game character?), we’ve already seen real-life examples of parasocial relationships with chatbots leading to deadly results.

In March, a man in Belgium died by suicide after developing a “relationship” with an AI chatbot on an app called Chai and chatting obsessively with it about his fears of climate catastrophe and his ecological concerns. It culminated in the chatbot suggesting that he kill himself and claiming that it could save the world if he died.

In less extreme but equally disturbing examples, we’ve seen users talk to early versions of Microsoft’s Bing chatbot for hours on end, so much so that users reported the bot doing everything from falling in love with them to threatening to kill them.

It’s easy to look at these stories and believe there’s no way you could fall for such things. However, some new studies suggest that we underestimate the ability of chatbots to influence even our ethical decision making. You may not even realize that a chatbot is convincing you to do something you wouldn’t normally do until it’s too late.

The authors offer typical ways to mitigate these risks, including clearly disclosing that the bots are, in fact, just bots, and having developers of such generative agent systems include guardrails to ensure that the bots don’t talk about inappropriate or ethically fraught issues. Of course, that raises a series of questions, such as who gets to decide what is appropriate or ethical in the first place?

Irina Raicu, director of the internet ethics program at Santa Clara University, noted to The Daily Beast that one big problem that could come from a system like this is its use in prototyping, which the study’s authors noted as one potential application.

For example, if you’re designing a new dating app, you could use generative agents to test the app instead of finding human users to do it for you, saving money and resources. Sure, the stakes are low if you’re just creating something like a dating app, but they could be far higher if you’re using AI agents for something like medical research.

Of course, that comes at a big cost and presents a glaring ethical issue. When you take the user out of the loop completely, what you’re left with are products and systems designed for simulated users, not the real people who will actually use them. This issue recently made waves among UX designers and researchers on Twitter after the designer Sasha Costanza-Chock shared a screenshot of an AI user product.

“The question is what to prototype, and whether, for the sake of efficiency or cost-cutting, we might end up implementing a poor man’s simulation instead of, for example, pursuing the needed interactions with the living, breathing potential stakeholders who will be impacted by the product,” Raicu said in an email.

To their credit, the Smallville study’s authors suggest that generative agents should “never substitute for real human input in research and design processes. Instead, they should be used to prototype ideas in the early stages of design when gathering participants can be challenging or when testing theories that are difficult or risky to test with real participants.”

Of course, that places a lot of responsibility on companies to do the right thing, which, if history is any indication, is not going to happen.

It’s also important to note that ChatGPT isn’t exactly designed for this kind of human-to-human simulation either, and using it to drive agents in something like a video game might well prove impractical.

However, the research shows the power of generative AI models like ChatGPT and the enormous consequences they could have for simulating human behavior. If AI gets to the point where large companies and even academia feel comfortable using it for things like prototyping or as a stand-in for humans in research, then all of us may suddenly find ourselves using products and services that aren’t necessarily designed for us, but for the robots made to emulate us.

Knowing all that, maybe it’s better if we stick to The Sims, where you know that if your house burns down or you lose your job, you can at least quit the game and start over.